diff --git a/.github/workflows/ci.yml b/.github/workflows/ci.yml

index 9ca185e89..cb41880c9 100644

--- a/.github/workflows/ci.yml

+++ b/.github/workflows/ci.yml

@@ -7,7 +7,7 @@ jobs:

test-ubuntu-latest:

runs-on: ubuntu-latest

steps:

- - uses: actions/checkout@v1

+ - uses: actions/checkout@v2

- name: make

run: |

sudo apt-get update

@@ -17,7 +17,7 @@ jobs:

run: ./utils/gen-test-certs.sh

- name: test-tls

run: |

- sudo apt-get -y install tcl8.5 tcl-tls

+ sudo apt-get -y install tcl tcl-tls

./runtest --clients 2 --verbose --tls

- name: cluster-test

run: |

@@ -42,14 +42,14 @@ jobs:

build-macos-latest:

runs-on: macos-latest

steps:

- - uses: actions/checkout@v1

+ - uses: actions/checkout@v2

- name: make

run: make -j2

build-libc-malloc:

runs-on: ubuntu-latest

steps:

- - uses: actions/checkout@v1

+ - uses: actions/checkout@v2

- name: make

run: |

sudo apt-get update

diff --git a/.github/workflows/daily.yml b/.github/workflows/daily.yml

index 3c10236b4..6e4f88ef3 100644

--- a/.github/workflows/daily.yml

+++ b/.github/workflows/daily.yml

@@ -1,16 +1,21 @@

name: Daily

on:

+ pull_request:

+ branches:

+ # any PR to a release branch.

+ - '[0-9].[0-9]'

schedule:

- - cron: '0 7 * * *'

+ - cron: '0 0 * * *'

jobs:

- test-jemalloc:

+ test-ubuntu-jemalloc:

runs-on: ubuntu-latest

- timeout-minutes: 1200

+ if: github.repository == 'redis/redis'

+ timeout-minutes: 14400

steps:

- - uses: actions/checkout@v1

+ - uses: actions/checkout@v2

- name: make

run: |

sudo apt-get -y install uuid-dev libcurl4-openssl-dev

@@ -21,12 +26,17 @@ jobs:

./runtest --accurate --verbose

- name: module api test

run: ./runtest-moduleapi --verbose

+ - name: sentinel tests

+ run: ./runtest-sentinel

+ - name: cluster tests

+ run: ./runtest-cluster

- test-libc-malloc:

+ test-ubuntu-libc-malloc:

runs-on: ubuntu-latest

- timeout-minutes: 1200

+ if: github.repository == 'redis/redis'

+ timeout-minutes: 14400

steps:

- - uses: actions/checkout@v1

+ - uses: actions/checkout@v2

- name: make

run: |

sudo apt-get -y install uuid-dev libcurl4-openssl-dev

@@ -37,11 +47,17 @@ jobs:

./runtest --accurate --verbose

- name: module api test

run: ./runtest-moduleapi --verbose

+ - name: sentinel tests

+ run: ./runtest-sentinel

+ - name: cluster tests

+ run: ./runtest-cluster

test:

runs-on: ubuntu-latest

+ if: github.repository == 'redis/redis'

+ timeout-minutes: 14400

steps:

- - uses: actions/checkout@v1

+ - uses: actions/checkout@v2

- name: make

run: |

sudo apt-get -y install uuid-dev libcurl4-openssl-dev

@@ -59,7 +75,7 @@ jobs:

test-ubuntu-arm:

runs-on: [self-hosted, linux, arm]

steps:

- - uses: actions/checkout@v1

+ - uses: actions/checkout@v2

- name: make

run: |

sudo apt-get -y install uuid-dev libcurl4-openssl-dev

@@ -74,16 +90,91 @@ jobs:

test-valgrind:

runs-on: ubuntu-latest

+ if: github.repository == 'redis/redis'

timeout-minutes: 14400

steps:

- - uses: actions/checkout@v1

+ - uses: actions/checkout@v2

- name: make

run: |

sudo apt-get -y install uuid-dev libcurl4-openssl-dev

make valgrind

- name: test

run: |

+ sudo apt-get update

sudo apt-get install tcl8.5 valgrind -y

./runtest --valgrind --verbose --clients 1

- name: module api test

run: ./runtest-moduleapi --valgrind --verbose --clients 1

+

+ test-centos7-jemalloc:

+ runs-on: ubuntu-latest

+ if: github.repository == 'redis/redis'

+ container: centos:7

+ timeout-minutes: 14400

+ steps:

+ - uses: actions/checkout@v2

+ - name: make

+ run: |

+ yum -y install centos-release-scl

+ yum -y install devtoolset-7

+ scl enable devtoolset-7 "make"

+ - name: test

+ run: |

+ yum -y install tcl

+ ./runtest --accurate --verbose

+ - name: module api test

+ run: ./runtest-moduleapi --verbose

+ - name: sentinel tests

+ run: ./runtest-sentinel

+ - name: cluster tests

+ run: ./runtest-cluster

+

+ test-centos7-tls:

+ runs-on: ubuntu-latest

+ if: github.repository == 'redis/redis'

+ container: centos:7

+ timeout-minutes: 14400

+ steps:

+ - uses: actions/checkout@v2

+ - name: make

+ run: |

+ yum -y install centos-release-scl epel-release

+ yum -y install devtoolset-7 openssl-devel openssl

+ scl enable devtoolset-7 "make BUILD_TLS=yes"

+ - name: test

+ run: |

+ yum -y install tcl tcltls

+ ./utils/gen-test-certs.sh

+ ./runtest --accurate --verbose --tls

+ ./runtest --accurate --verbose

+ - name: module api test

+ run: |

+ ./runtest-moduleapi --verbose --tls

+ ./runtest-moduleapi --verbose

+ - name: sentinel tests

+ run: |

+ ./runtest-sentinel --tls

+ ./runtest-sentinel

+ - name: cluster tests

+ run: |

+ ./runtest-cluster --tls

+ ./runtest-cluster

+

+ test-macos-latest:

+ runs-on: macos-latest

+ if: github.repository == 'redis/redis'

+ timeout-minutes: 14400

+ steps:

+ - uses: actions/checkout@v2

+ - name: make

+ run: make

+ - name: test

+ run: |

+ ./runtest --accurate --verbose --no-latency

+ - name: module api test

+ run: ./runtest-moduleapi --verbose

+ - name: sentinel tests

+ run: ./runtest-sentinel

+ - name: cluster tests

+ run: ./runtest-cluster

+

diff --git a/.gitignore b/.gitignore

index c169eca17..21f903288 100644

--- a/.gitignore

+++ b/.gitignore

@@ -48,9 +48,13 @@ src/nodes.conf

deps/lua/src/lua

deps/lua/src/luac

deps/lua/src/liblua.a

+tests/tls/*

.make-*

.prerequisites

*.dSYM

Makefile.dep

.vscode/*

.idea/*

+.ccls

+.ccls-cache/*

+compile_commands.json

diff --git a/00-RELEASENOTES b/00-RELEASENOTES

index c6ee44246..bff270e77 100644

--- a/00-RELEASENOTES

+++ b/00-RELEASENOTES

@@ -11,6 +11,1139 @@ CRITICAL: There is a critical bug affecting MOST USERS. Upgrade ASAP.

SECURITY: There are security fixes in the release.

--------------------------------------------------------------------------------

+================================================================================

+Redis 6.0.10 Released Tue Jan 12 16:20:20 IST 2021

+================================================================================

+

+Upgrade urgency MODERATE: several bugs with moderate impact are fixed.

+Here is a comprehensive list of changes in this release compared to 6.0.9.

+

+Command behavior changes:

+* SWAPDB invalidates WATCHed keys (#8239)

+* SORT command behaves differently when used on a writable replica (#8283)

+* EXISTS should not alter LRU (#8016)

+ In Redis 5.0 and 6.0 it would have touched the LRU/LFU of the key.

+* OBJECT should not reveal logically expired keys (#8016)

+ Will now behave the same as TYPE or any other non-DEBUG command.

+* GEORADIUS[BYMEMBER] can fail with -OOM if Redis is over the memory limit (#8107)

+

+Other behavior changes:

+* Sentinel: Fix missing updates to the config file after SENTINEL SET command (#8229)

+* CONFIG REWRITE is atomic and safer, but requires write access to the config file's folder (#7824, #8051)

+ This change was already present in 6.0.9, but was missing from the release notes.

+

+Bug fixes with compatibility implications (bugs introduced in Redis 6.0):

+* Fix RDB CRC64 checksum on big-endian systems (#8270)

+ If you're using big-endian please consider the compatibility implications with

+ RESTORE, replication and persistence.

+* Fix wrong order of key/value in Lua's map response (#8266)

+ If your scripts use redis.setresp() or return a map (new in Redis 6.0), please

+ consider the implications.

+

+Bug fixes:

+* Fix an issue where a forked process deletes the parent's pidfile (#8231)

+* Fix crashes when enabling io-threads-do-reads (#8230)

+* Fix a crash in redis-cli after executing cluster backup (#8267)

+* Handle output buffer limits for module blocked clients (#8141)

+ A module sending a reply to a blocked client could have gone beyond the limit.

+* Fix setproctitle related crashes. (#8150, #8088)

+ Caused various crashes on startup, mainly on Apple M1 chips or under instrumentation.

+* Backup/restore cluster mode keys to slots map for repl-diskless-load=swapdb (#8108)

+ In cluster mode with repl-diskless-load, when loading failed, slot map wouldn't

+ have been restored.

+* Fix oom-score-adj-values range, and bug when used in config file (#8046)

+ Enabling setting this in the config file in a line after enabling it, would

+ have been buggy.

+* Reset average ttl when empty databases (#8106)

+ This only caused a misleading metric in INFO.

+* Disable rehash when Redis has child process (#8007)

+ This could have caused excessive CoW during BGSAVE, replication or AOFRW.

+* Further improved ACL algorithm for picking categories (#7966)

+ Output of ACL GETUSER is now more similar to the one provided by ACL SETUSER.

+* Fix bug with module GIL being released prematurely (#8061)

+ Could in theory (and rarely) cause multi-threaded modules to corrupt memory.

+* Reduce effect of client tracking causing feedback loop in key eviction (#8100)

+* Fix cluster access to unaligned memory (SIGBUS on old ARM) (#7958)

+* Fix saving of strings larger than 2GB into RDB files (#8306)

+

+Additional improvements:

+* Avoid wasteful transient memory allocation in certain cases (#8286, #5954)

+

+Platform / toolchain support related improvements:

+* Fix crash log registers output on ARM. (#8020)

+* Add a check for an ARM64 Linux kernel bug (#8224)

+ Due to the potential severity of this issue, Redis will print a log warning on startup.

+* Raspberry build fix. (#8095)

+

+New configuration options:

+* oom-score-adj-values config can now take absolute values (besides relative ones) (#8046)

+

+Module related fixes:

+* Moved RMAPI_FUNC_SUPPORTED so that it's usable (#8037)

+* Improve timer accuracy (#7987)

+* Allow '\0' inside of result of RM_CreateStringPrintf (#6260)

+

+================================================================================

+Redis 6.0.9 Released Mon Oct 26 10:37:47 IST 2020

+================================================================================

+

+Upgrade urgency: SECURITY if you use an affected platform (see below).

+ Otherwise the upgrade urgency is MODERATE.

+

+This release fixes a potential heap overflow when using a heap allocator other

+than jemalloc or glibc's malloc. See:

+https://github.com/redis/redis/pull/7963

+

+Other fixes in this release:

+

+New:

+* Memory reporting of clients argv (#7874)

+* Add redis-cli control on raw format line delimiter (#7841)

+* Add redis-cli support for rediss:// -u prefix (#7900)

+* Get rss size support for NetBSD and DragonFlyBSD

+

+Behavior changes:

+* WATCH no longer ignores keys which have expired for MULTI/EXEC (#7920)

+* Correct OBJECT ENCODING response for stream type (#7797)

+* Allow blocked XREAD on a cluster replica (#7881)

+* TLS: Do not require CA config if not used (#7862)

+

+Bug fixes:

+* INFO report real peak memory (before eviction) (#7894)

+* Allow requirepass config to clear the password (#7899)

+* Fix config rewrite file handling to make it really atomic (#7824)

+* Fix excessive categories being displayed from ACLs (#7889)

+* Add fsync in replica when full RDB payload was received (#7839)

+* Don't write replies to socket when output buffer limit reached (#7202)

+* Fix redis-check-rdb support for modules aux data (#7826)

+* Other smaller bug fixes

+

+Modules API:

+* Add APIs for version and compatibility checks (#7865)

+* Add RM_GetClientCertificate (#7866)

+* Add RM_GetDetachedThreadSafeContext (#7886)

+* Add RM_GetCommandKeys (#7884)

+* Add Swapdb Module Event (#7804)

+* RM_GetContextFlags provides indication of being in a fork child (#7783)

+* RM_GetContextFlags document missing flags: MULTI_DIRTY, IS_CHILD (#7821)

+* Expose real client on connection events (#7867)

+* Minor improvements to module blocked on keys (#7903)

+

+Full list of commits:

+

+Yossi Gottlieb in commit ce0d74d8f:

+ Fix wrong zmalloc_size() assumption. (#7963)

+ 1 file changed, 3 deletions(-)

+

+Oran Agra in commit d3ef26822:

+ Attempt to fix sporadic test failures due to wait_for_log_messages (#7955)

+ 1 file changed, 2 insertions(+)

+

+David CARLIER in commit 76993a0d4:

+ cpu affinity: DragonFlyBSD support (#7956)

+ 2 files changed, 9 insertions(+), 2 deletions(-)

+

+Zach Fewtrell in commit b23cdc14a:

+ fix invalid 'failover' identifier in cluster slave selection test (#7942)

+ 1 file changed, 1 insertion(+), 1 deletion(-)

+

+WuYunlong in commit 99a4cb401:

+ Update rdb_last_bgsave_time_sec in INFO on diskless replication (#7917)

+ 1 file changed, 11 insertions(+), 14 deletions(-)

+

+Wen Hui in commit 258287c35:

+ do not add save parameter during config rewrite in sentinel mode (#7945)

+ 1 file changed, 6 insertions(+)

+

+Qu Chen in commit 6134279e2:

+ WATCH no longer ignores keys which have expired for MULTI/EXEC. (#7920)

+ 2 files changed, 3 insertions(+), 3 deletions(-)

+

+Oran Agra in commit d15ec67c6:

+ improve verbose logging on failed test. print log file lines (#7938)

+ 1 file changed, 4 insertions(+)

+

+Yossi Gottlieb in commit 8a2e6d24f:

+ Add a --no-latency tests flag. (#7939)

+ 5 files changed, 23 insertions(+), 9 deletions(-)

+

+filipe oliveira in commit 0a1737dc5:

+ Fixed bug concerning redis-benchmark non clustered benchmark forcing always the same hash tag {tag} (#7931)

+ 1 file changed, 31 insertions(+), 24 deletions(-)

+

+Oran Agra in commit 6d9b3df71:

+ fix 32bit build warnings (#7926)

+ 2 files changed, 3 insertions(+), 3 deletions(-)

+

+Wen Hui in commit ed6f7a55e:

+ fix double fclose in aofrewrite (#7919)

+ 1 file changed, 6 insertions(+), 5 deletions(-)

+

+Oran Agra in commit 331d73c92:

+ INFO report peak memory before eviction (#7894)

+ 1 file changed, 11 insertions(+), 1 deletion(-)

+

+Yossi Gottlieb in commit e88e13528:

+ Fix tests failure on busybox systems. (#7916)

+ 2 files changed, 2 insertions(+), 2 deletions(-)

+

+Oran Agra in commit b7f53738e:

+ Allow requirepass config to clear the password (#7899)

+ 1 file changed, 18 insertions(+), 8 deletions(-)

+

+Wang Yuan in commit 2ecb28b68:

+ Remove temporary aof and rdb files in a background thread (#7905)

+ 2 files changed, 3 insertions(+), 3 deletions(-)

+

+guybe7 in commit 7bc605e6b:

+ Minor improvements to module blocked on keys (#7903)

+ 3 files changed, 15 insertions(+), 9 deletions(-)

+

+Andreas Lind in commit 1b484608d:

+ Support redis-cli -u rediss://... (#7900)

+ 1 file changed, 9 insertions(+), 1 deletion(-)

+

+Yossi Gottlieb in commit 95095d680:

+ Modules: fix RM_GetCommandKeys API. (#7901)

+ 3 files changed, 4 insertions(+), 7 deletions(-)

+

+Meir Shpilraien (Spielrein) in commit cd3ae2f2c:

+ Add Module API for version and compatibility checks (#7865)

+ 9 files changed, 180 insertions(+), 3 deletions(-)

+

+Yossi Gottlieb in commit 1d723f734:

+ Module API: Add RM_GetClientCertificate(). (#7866)

+ 6 files changed, 88 insertions(+)

+

+Yossi Gottlieb in commit d72172752:

+ Modules: Add RM_GetDetachedThreadSafeContext(). (#7886)

+ 4 files changed, 52 insertions(+), 2 deletions(-)

+

+Yossi Gottlieb in commit e4f9aff19:

+ Modules: add RM_GetCommandKeys().

+ 6 files changed, 238 insertions(+), 1 deletion(-)

+

+Yossi Gottlieb in commit 6682b913e:

+ Introduce getKeysResult for getKeysFromCommand.

+ 7 files changed, 170 insertions(+), 121 deletions(-)

+

+Madelyn Olson in commit 9db65919c:

+ Fixed excessive categories being displayed from acls (#7889)

+ 2 files changed, 29 insertions(+), 2 deletions(-)

+

+Oran Agra in commit f34c50cf6:

+ Add some additional signal info to the crash log (#7891)

+ 1 file changed, 4 insertions(+), 1 deletion(-)

+

+Oran Agra in commit 300bb4701:

+ Allow blocked XREAD on a cluster replica (#7881)

+ 3 files changed, 43 insertions(+)

+

+Oran Agra in commit bc5cf0f1a:

+ memory reporting of clients argv (#7874)

+ 5 files changed, 55 insertions(+), 5 deletions(-)

+

+DvirDukhan in commit 13d2e6a57:

+ redis-cli add control on raw format line delimiter (#7841)

+ 1 file changed, 8 insertions(+), 6 deletions(-)

+

+Oran Agra in commit d54e25620:

+ Include internal sds fragmentation in MEMORY reporting (#7864)

+ 2 files changed, 7 insertions(+), 7 deletions(-)

+

+Oran Agra in commit ac2c2b74e:

+ Fix crash in script timeout during AOF loading (#7870)

+ 2 files changed, 47 insertions(+), 4 deletions(-)

+

+Rafi Einstein in commit 00d2082e7:

+ Makefile: enable program suffixes via PROG_SUFFIX (#7868)

+ 2 files changed, 10 insertions(+), 6 deletions(-)

+

+nitaicaro in commit d2c2c26e7:

+ Fixed Tracking test “The other connection is able to get invalidations” (#7871)

+ 1 file changed, 3 insertions(+), 2 deletions(-)

+

+Yossi Gottlieb in commit 2c172556f:

+ Modules: expose real client on conn events.

+ 1 file changed, 11 insertions(+), 2 deletions(-)

+

+Yossi Gottlieb in commit 2972d0c1f:

+ Module API: Fail ineffective auth calls.

+ 1 file changed, 5 insertions(+)

+

+Yossi Gottlieb in commit aeb2a3b6a:

+ TLS: Do not require CA config if not used. (#7862)

+ 1 file changed, 5 insertions(+), 3 deletions(-)

+

+Oran Agra in commit d8e64aeb8:

+ warning: comparison between signed and unsigned integer in 32bit build (#7838)

+ 1 file changed, 2 insertions(+), 2 deletions(-)

+

+David CARLIER in commit 151209982:

+ Add support for Haiku OS (#7435)

+ 3 files changed, 16 insertions(+)

+

+Gavrie Philipson in commit b1d3e169f:

+ Fix typo in module API docs (#7861)

+ 1 file changed, 2 insertions(+), 2 deletions(-)

+

+David CARLIER in commit 08e3b8d13:

+ getting rss size implementation for netbsd (#7293)

+ 1 file changed, 20 insertions(+)

+

+Oran Agra in commit 0377a889b:

+ Fix new obuf-limits tests to work with TLS (#7848)

+ 2 files changed, 29 insertions(+), 13 deletions(-)

+

+caozb in commit a057ad9b1:

+ ignore slaveof no one in redis.conf (#7842)

+ 1 file changed, 10 insertions(+), 1 deletion(-)

+

+Wang Yuan in commit 87ecee645:

+ Don't support Gopher if enable io threads to read queries (#7851)

+ 2 files changed, 8 insertions(+), 5 deletions(-)

+

+Wang Yuan in commit b92902236:

+ Set 'loading' and 'shutdown_asap' to volatile sig_atomic_t type (#7845)

+ 1 file changed, 2 insertions(+), 2 deletions(-)

+

+Uri Shachar in commit ee0875a02:

+ Fix config rewrite file handling to make it really atomic (#7824)

+ 1 file changed, 49 insertions(+), 47 deletions(-)

+

+WuYunlong in commit d577519e1:

+ Add fsync to readSyncBulkPayload(). (#7839)

+ 1 file changed, 11 insertions(+)

+

+Wen Hui in commit 104e0ea3e:

+ rdb.c: handle fclose error case differently to avoid double fclose (#7307)

+ 1 file changed, 7 insertions(+), 6 deletions(-)

+

+Wang Yuan in commit 0eb015ac6:

+ Don't write replies if close the client ASAP (#7202)

+ 7 files changed, 144 insertions(+), 2 deletions(-)

+

+Guy Korland in commit 08a03e32c:

+ Fix RedisModule_HashGet examples (#6697)

+ 1 file changed, 4 insertions(+), 4 deletions(-)

+

+Oran Agra in commit 09551645d:

+ fix recently broken TLS build error, and add coverage for CI (#7833)

+ 2 files changed, 4 insertions(+), 3 deletions(-)

+

+David CARLIER in commit c545ba5d0:

+ Further NetBSD update and build fixes. (#7831)

+ 3 files changed, 72 insertions(+), 3 deletions(-)

+

+WuYunlong in commit ec9050053:

+ Fix redundancy use of semicolon in do-while macros in ziplist.c. (#7832)

+ 1 file changed, 3 insertions(+), 3 deletions(-)

+

+yixiang in commit 27a4d1314:

+ Fix connGetSocketError usage (#7811)

+ 2 files changed, 6 insertions(+), 4 deletions(-)

+

+Oran Agra in commit 30795dcae:

+ RM_GetContextFlags - document missing flags (#7821)

+ 1 file changed, 6 insertions(+)

+

+Yossi Gottlieb in commit 14a12849f:

+ Fix occasional hangs on replication reconnection. (#7830)

+ 2 files changed, 14 insertions(+), 3 deletions(-)

+

+Ariel Shtul in commit d5a1b06dc:

+ Fix redis-check-rdb support for modules aux data (#7826)

+ 3 files changed, 21 insertions(+), 1 deletion(-)

+

+Wen Hui in commit 39f793693:

+ refactor rewriteStreamObject code for adding missing streamIteratorStop call (#7829)

+ 1 file changed, 36 insertions(+), 18 deletions(-)

+

+WuYunlong in commit faad29bfb:

+ Make IO threads killable so that they can be canceled at any time.

+ 1 file changed, 1 insertion(+)

+

+WuYunlong in commit b3f1b5830:

+ Make main thread killable so that it can be canceled at any time. Refine comment of makeThreadKillable().

+ 3 files changed, 11 insertions(+), 4 deletions(-)

+

+Oran Agra in commit 0f43d1f55:

+ RM_GetContextFlags provides indication that we're in a fork child (#7783)

+ 8 files changed, 28 insertions(+), 18 deletions(-)

+

+Wen Hui in commit a55ea9cdf:

+ Add Swapdb Module Event (#7804)

+ 5 files changed, 52 insertions(+)

+

+Daniel Dai in commit 1d8f72bef:

+ fix make warnings in debug.c MacOS (#7805)

+ 2 files changed, 3 insertions(+), 2 deletions(-)

+

+David CARLIER in commit 556953d93:

+ debug.c: NetBSD build warning fix. (#7810)

+ 1 file changed, 4 insertions(+), 3 deletions(-)

+

+Wang Yuan in commit d02435b66:

+ Remove tmp rdb file in background thread (#7762)

+ 6 files changed, 82 insertions(+), 8 deletions(-)

+

+Oran Agra in commit 1bd7bfdc0:

+ Add printf attribute and fix warnings and a minor bug (#7803)

+ 2 files changed, 12 insertions(+), 4 deletions(-)

+

+WuYunlong in commit d25147b4c:

+ bio: doFastMemoryTest should try to kill io threads as well.

+ 3 files changed, 19 insertions(+)

+

+WuYunlong in commit 4489ba081:

+ bio: fix doFastMemoryTest.

+ 4 files changed, 25 insertions(+), 3 deletions(-)

+

+Wen Hui in commit cf85def67:

+ correct OBJECT ENCODING response for stream type (#7797)

+ 1 file changed, 1 insertion(+)

+

+WuYunlong in commit cf5bcf892:

+ Clarify help text of tcl scripts. (#7798)

+ 1 file changed, 1 insertion(+)

+

+Mykhailo Pylyp in commit f72665c65:

+ Recalculate hardcoded variables from $::instances_count in sentinel tests (#7561)

+ 3 files changed, 15 insertions(+), 13 deletions(-)

+

+Oran Agra in commit c67b19e7a:

+ Fix failing valgrind installation in github actions (#7792)

+ 1 file changed, 1 insertion(+)

+

+Oran Agra in commit 92763fd2a:

+ fix broken PEXPIREAT test (#7791)

+ 1 file changed, 10 insertions(+), 6 deletions(-)

+

+Wang Yuan in commit f5b4c0ccb:

+ Remove dead global variable 'lru_clock' (#7782)

+ 1 file changed, 1 deletion(-)

+

+Oran Agra in commit 82d431fd6:

+ Squash merging 125 typo/grammar/comment/doc PRs (#7773)

+ 80 files changed, 436 insertions(+), 416 deletions(-)

+

+================================================================================

+Redis 6.0.8 Released Wed Sep 09 23:34:17 IDT 2020

+================================================================================

+

+Upgrade urgency HIGH: Anyone who's using Redis 6.0.7 with Sentinel or

+CONFIG REWRITE command is affected and should upgrade ASAP, see #7760.

+

+Bug fixes:

+

+* CONFIG REWRITE after setting oom-score-adj-values either via CONFIG SET or

+ loading it from a config file, will generate a corrupt config file that will

+ cause Redis to fail to start

+* Fix issue with redis-cli --pipe on MacOS

+* Fix RESP3 response for HKEYS/HVALS on non-existing key

+* Various small bug fixes

+

+New features / Changes:

+

+* Remove THP warning when set to madvise

+* Allow EXEC with read commands on readonly replica in cluster

+* Add masters/replicas options to redis-cli --cluster call command

+

+Module API:

+

+* Add RedisModule_ThreadSafeContextTryLock

+

+Full list of commits:

+

+Oran Agra in commit cdabf696a:

+ Fix RESP3 response for HKEYS/HVALS on non-existing key

+ 1 file changed, 3 insertions(+), 1 deletion(-)

+

+Oran Agra in commit ec633c716:

+ Fix leak in new blockedclient module API test

+ 1 file changed, 3 insertions(+)

+

+Yossi Gottlieb in commit 6bac07c5c:

+ Tests: fix oom-score-adj false positives. (#7772)

+ 1 file changed, 1 insertion(+), 1 deletion(-)

+

+杨博东 in commit 6043dc614:

+ Tests: Add aclfile load and save tests (#7765)

+ 2 files changed, 41 insertions(+)

+

+Roi Lipman in commit c0b5f9bf0:

+ RM_ThreadSafeContextTryLock a non-blocking method for acquiring GIL (#7738)

+ 7 files changed, 122 insertions(+), 1 deletion(-)

+

+Yossi Gottlieb in commit 5780a1599:

+ Tests: validate CONFIG REWRITE for all params. (#7764)

+ 6 files changed, 43 insertions(+), 6 deletions(-)

+

+Oran Agra in commit e3c14b25d:

+ Change THP warning to use madvise rather than never (#7771)

+ 1 file changed, 1 insertion(+), 1 deletion(-)

+

+Itamar Haber in commit 28929917b:

+ Documents RM_Call's fmt (#5448)

+ 1 file changed, 25 insertions(+)

+

+Jan-Erik Rediger in commit 9146402c2:

+ Check that THP is not set to always (madvise is ok) (#4001)

+ 1 file changed, 1 insertion(+), 1 deletion(-)

+

+Yossi Gottlieb in commit d05089429:

+ Tests: clean up stale .cli files. (#7768)

+ 1 file changed, 2 insertions(+)

+

+Eran Liberty in commit 8861c1bae:

+ Allow exec with read commands on readonly replica in cluster (#7766)

+ 3 files changed, 59 insertions(+), 3 deletions(-)

+

+Yossi Gottlieb in commit 2cf2ff2f6:

+ Fix CONFIG REWRITE of oom-score-adj-values. (#7761)

+ 1 file changed, 2 insertions(+), 1 deletion(-)

+

+Oran Agra in commit 1386c80f7:

+ handle cur_test for nested tests

+ 1 file changed, 3 insertions(+)

+

+Oran Agra in commit c7d4945f0:

+ Add daily CI for MacOS (#7759)

+ 1 file changed, 18 insertions(+)

+

+bodong.ybd in commit 32548264c:

+ Tests: Some fixes for macOS

+ 3 files changed, 26 insertions(+), 11 deletions(-)

+

+Oran Agra in commit 1e17f9812:

+ Fix cluster consistency-check test (#7754)

+ 1 file changed, 55 insertions(+), 29 deletions(-)

+

+Yossi Gottlieb in commit f4ecdf86a:

+ Tests: fix unmonitored servers. (#7756)

+ 1 file changed, 5 insertions(+)

+

+Oran Agra in commit 9f020050d:

+ fix broken cluster/sentinel tests by recent commit (#7752)

+ 1 file changed, 1 insertion(+), 1 deletion(-)

+

+Oran Agra in commit fdbabb496:

+ Improve valgrind support for cluster tests (#7725)

+ 3 files changed, 83 insertions(+), 23 deletions(-)

+

+Oran Agra in commit 35a6a0bbc:

+ test infra - add durable mode to work around test suite crashing

+ 3 files changed, 35 insertions(+), 3 deletions(-)

+

+Oran Agra in commit e3136b13f:

+ test infra - wait_done_loading

+ 2 files changed, 16 insertions(+), 36 deletions(-)

+

+Oran Agra in commit 83c75dbd9:

+ test infra - flushall between tests in external mode

+ 1 file changed, 1 insertion(+)

+

+Oran Agra in commit 265f5d3cf:

+ test infra - improve test skipping ability

+ 3 files changed, 91 insertions(+), 36 deletions(-)

+

+Oran Agra in commit fcd3a9908:

+ test infra - reduce disk space usage

+ 3 files changed, 33 insertions(+), 11 deletions(-)

+

+Oran Agra in commit b6ea4699f:

+ test infra - write test name to logfile

+ 3 files changed, 35 insertions(+)

+

+Yossi Gottlieb in commit 4a4b07fc6:

+ redis-cli: fix writeConn() buffer handling. (#7749)

+ 1 file changed, 37 insertions(+), 6 deletions(-)

+

+Oran Agra in commit f2d08de2e:

+ Print server startup messages after daemonization (#7743)

+ 1 file changed, 4 insertions(+), 4 deletions(-)

+

+Thandayuthapani in commit 77541d555:

+ Add masters/replicas options to redis-cli --cluster call command (#6491)

+ 1 file changed, 13 insertions(+), 2 deletions(-)

+

+Oran Agra in commit 91d13a854:

+ fix README about BUILD_WITH_SYSTEMD usage (#7739)

+ 1 file changed, 1 insertion(+), 1 deletion(-)

+

+Yossi Gottlieb in commit 88d03d965:

+ Fix double-make issue with make && make install. (#7734)

+ 1 file changed, 2 insertions(+)

+

+================================================================================

+Redis 6.0.7 Released Fri Aug 28 11:05:09 IDT 2020

+================================================================================

+

+Upgrade urgency MODERATE: several bugs with moderate impact are fixed,

+specifically the first two listed below, which cause protocol errors for clients.

+

+Bug fixes:

+

+* CONFIG SET could hang the client when it arrives during RDB/AOF loading (when

+ processed after another command that was also rejected with a -LOADING error)

+* LPOS command when RANK is greater than matches responded with broken protocol

+ (negative multi-bulk count)

+* UNLINK / Lazyfree for stream type key would never have done async freeing

+* PERSIST should invalidate WATCH (Like EXPIRE does)

+* EXEC with only read commands could have been rejected when OOM

+* TLS: relax verification on CONFIG SET (Don't error if some configs are set

+ and tls isn't enabled)

+* TLS: support cluster/replication without tls-port

+* Systemd startup after network is online

+* Redis-benchmark improvements

+* Various small bug fixes

+

+New features:

+

+* Add oom-score-adj configuration option to control Linux OOM killer

+* Show IO threads statistics and status in INFO output

+* Add optional tls verification mode (see tls-auth-clients)

+

+Module API:

+

+* Add RedisModule_HoldString

+* Add loaded keyspace event

+* Fix RedisModuleEvent_LoadingProgress

+* Fix RedisModuleEvent_MasterLinkChange hook missing on successful psync

+* Fix missing RM_CLIENTINFO_FLAG_SSL

+* Refactor redismodule.h for use with -fno-common / extern

+

+Full list of commits:

+

+Oran Agra in commit c26394e4f:

+ Reduce the probability of failure when start redis in runtest-cluster #7554 (#7635)

+ 1 file changed, 23 insertions(+), 5 deletions(-)

+

+Leoš Literák in commit 745d5e802:

+ Update README.md with instructions how to build with systemd support (#7730)

+ 1 file changed, 5 insertions(+)

+

+Yossi Gottlieb in commit 03f1d208a:

+ Fix oom-score-adj on older distros. (#7724)

+ 1 file changed, 2 insertions(+), 2 deletions(-)

+

+Yossi Gottlieb in commit 941174d9c:

+ Backport Lua 5.2.2 stack overflow fix. (#7733)

+ 1 file changed, 1 insertion(+), 1 deletion(-)

+

+Wang Yuan in commit c897dba14:

+ Fix wrong format specifiers of 'sdscatfmt' for the INFO command (#7706)

+ 1 file changed, 1 insertion(+), 1 deletion(-)

+

+Wen Hui in commit 5e3fab5e7:

+ fix make warnings (#7692)

+ 1 file changed, 4 insertions(+), 3 deletions(-)

+

+Nathan Scott in commit a2b09c13f:

+ Annotate module API functions in redismodule.h for use with -fno-common (#6900)

+ 1 file changed, 265 insertions(+), 241 deletions(-)

+

+Yossi Gottlieb in commit bf244273f:

+ Add oom-score-adj configuration option to control Linux OOM killer. (#1690)

+ 8 files changed, 306 insertions(+), 1 deletion(-)

+

+Meir Shpilraien (Spielrein) in commit b5a6ab98f:

+ see #7544, added RedisModule_HoldString api. (#7577)

+ 4 files changed, 83 insertions(+), 8 deletions(-)

+

+ShooterIT in commit ff04cf62b:

+ [Redis-benchmark] Remove zrem test, add zpopmin test

+ 1 file changed, 5 insertions(+), 5 deletions(-)

+

+ShooterIT in commit 0f3260f31:

+ [Redis-benchmark] Support zset type

+ 1 file changed, 16 insertions(+)

+

+Arun Ranganathan in commit 45d0b94fc:

+ Show threading configuration in INFO output (#7446)

+ 3 files changed, 46 insertions(+), 14 deletions(-)

+

+Meir Shpilraien (Spielrein) in commit a22f61e12:

+ This PR introduces a new loaded keyspace event (#7536)

+ 8 files changed, 135 insertions(+), 4 deletions(-)

+

+Oran Agra in commit 1c9ca1030:

+ Fix rejectCommand trims newline in shared error objects, hung clients (#7714)

+ 4 files changed, 42 insertions(+), 23 deletions(-)

+

+valentinogeron in commit 217471795:

+ EXEC with only read commands should not be rejected when OOM (#7696)

+ 2 files changed, 51 insertions(+), 8 deletions(-)

+

+Itamar Haber in commit 6e6c47d16:

+ Expands lazyfree's effort estimate to include Streams (#5794)

+ 1 file changed, 24 insertions(+)

+

+Yossi Gottlieb in commit da6813623:

+ Add language servers stuff, test/tls to gitignore. (#7698)

+ 1 file changed, 4 insertions(+)

+

+Valentino Geron in commit de7fb126e:

+ Assert that setDeferredAggregateLen isn't called with negative value

+ 1 file changed, 1 insertion(+)

+

+Valentino Geron in commit 6cf27f25f:

+ Fix LPOS command when RANK is greater than matches

+ 2 files changed, 9 insertions(+), 2 deletions(-)

+

+Yossi Gottlieb in commit 9bba54ace:

+ Tests: fix redis-cli with remote hosts. (#7693)

+ 3 files changed, 5 insertions(+), 5 deletions(-)

+

+huangzhw in commit 0fec2cb81:

+ RedisModuleEvent_LoadingProgress always at 100% progress (#7685)

+ 1 file changed, 2 insertions(+), 2 deletions(-)

+

+guybe7 in commit 931e19aa6:

+ Modules: Invalidate saved_oparray after use (#7688)

+ 1 file changed, 2 insertions(+)

+

+杨博东 in commit 6f2065570:

+ Fix flock cluster config may cause failure to restart after kill -9 (#7674)

+ 4 files changed, 31 insertions(+), 7 deletions(-)

+

+Raghav Muddur in commit 200149a2a:

+ Update clusterMsgDataPublish to clusterMsgModule (#7682)

+ 1 file changed, 1 insertion(+), 1 deletion(-)

+

+Madelyn Olson in commit 72daa1b4e:

+ Fixed hset error since it's shared with hmset (#7678)

+ 1 file changed, 1 insertion(+), 1 deletion(-)

+

+guybe7 in commit 3bf9ac994:

+ PERSIST should signalModifiedKey (Like EXPIRE does) (#7671)

+ 1 file changed, 1 insertion(+)

+

+Oran Agra in commit b37501684:

+ OOM Crash log include size of allocation attempt. (#7670)

+ 1 file changed, 2 insertions(+), 1 deletion(-)

+

+Wen Hui in commit 2136cb68f:

+ [module] using predefined REDISMODULE_NO_EXPIRE in RM_GetExpire (#7669)

+ 1 file changed, 2 insertions(+), 1 deletion(-)

+

+Oran Agra in commit f56aee4bc:

+ Trim trailing spaces in error replies coming from rejectCommand (#7668)

+ 1 file changed, 5 insertions(+), 1 deletion(-)

+

+Yossi Gottlieb in commit 012d7506a:

+ Module API: fix missing RM_CLIENTINFO_FLAG_SSL. (#7666)

+ 6 files changed, 82 insertions(+), 1 deletion(-)

+

+Yossi Gottlieb in commit a0adbc857:

+ TLS: relax verification on CONFIG SET. (#7665)

+ 2 files changed, 24 insertions(+), 7 deletions(-)

+

+Madelyn Olson in commit 2ef29715b:

+ Fixed timer warning (#5953)

+ 1 file changed, 1 insertion(+), 1 deletion(-)

+

+Wagner Francisco Mezaroba in commit b76f171f5:

+ allow --pattern to be used along with --bigkeys (#3586)

+ 1 file changed, 9 insertions(+), 2 deletions(-)

+

+zhaozhao.zz in commit cc7b57765:

+ redis-benchmark: fix wrong random key for hset (#4895)

+ 1 file changed, 1 insertion(+), 1 deletion(-)

+

+zhaozhao.zz in commit 479c1ba77:

+ CLIENT_MASTER should ignore server.proto_max_bulk_len

+ 1 file changed, 2 insertions(+), 1 deletion(-)

+

+zhaozhao.zz in commit f61ce8a52:

+ config: proto-max-bulk-len must be 1mb or greater

+ 2 files changed, 2 insertions(+), 2 deletions(-)

+

+zhaozhao.zz in commit 0350f597a:

+ using proto-max-bulk-len in checkStringLength for SETRANGE and APPEND

+ 1 file changed, 2 insertions(+), 2 deletions(-)

+

+YoongHM in commit eea63548d:

+ Start redis after network is online (#7639)

+ 1 file changed, 2 insertions(+)

+

+Yossi Gottlieb in commit aef6d74fb:

+ Run daily workflow on main repo only (no forks). (#7646)

+ 1 file changed, 7 insertions(+)

+

+WuYunlong in commit 917b4d241:

+ see #7250, fix signature of RedisModule_DeauthenticateAndCloseClient (#7645)

+ 1 file changed, 1 insertion(+), 1 deletion(-)

+

+Wang Yuan in commit efab7fd54:

+ Print error info if failed opening config file (#6943)

+ 1 file changed, 2 insertions(+), 1 deletion(-)

+

+Wen Hui in commit 8c4468bcf:

+ fix memory leak in ACLLoadFromFile error handling (#7623)

+ 1 file changed, 1 insertion(+)

+

+Oran Agra in commit 89724e1d2:

+ redis-cli --cluster-yes - negate force flag for clarity

+ 1 file changed, 9 insertions(+), 9 deletions(-)

+

+Frank Meier in commit c813739af:

+ reintroduce REDISCLI_CLUSTER_YES env variable in redis-cli

+ 1 file changed, 6 insertions(+)

+

+Frank Meier in commit 7e3b86c18:

+ add force option to 'create-cluster create' script call (#7612)

+ 1 file changed, 6 insertions(+), 2 deletions(-)

+

+Oran Agra in commit 3f7fa4312:

+ fix new rdb test failing on timing issues (#7604)

+ 1 file changed, 2 insertions(+), 2 deletions(-)

+

+Yossi Gottlieb in commit 417976d7a:

+ Fix test-centos7-tls daily job. (#7598)

+ 1 file changed, 2 insertions(+), 2 deletions(-)

+

+Oran Agra in commit c41818c51:

+ module hook for master link up missing on successful psync (#7584)

+ 2 files changed, 22 insertions(+), 2 deletions(-)

+

+Yossi Gottlieb in commit 6ef3fc185:

+ CI: Add daily CentOS 7.x jobs. (#7582)

+ 1 file changed, 50 insertions(+), 4 deletions(-)

+

+WuYunlong in commit 002c37482:

+ Fix running single test 14-consistency-check.tcl (#7587)

+ 1 file changed, 1 insertion(+)

+

+Yossi Gottlieb in commit 66cbbb6ad:

+ Clarify RM_BlockClient() error condition. (#6093)

+ 1 file changed, 9 insertions(+)

+

+namtsui in commit 22aba2207:

+ Avoid an out-of-bounds read in the redis-sentinel (#7443)

+ 1 file changed, 2 insertions(+), 2 deletions(-)

+

+Wen Hui in commit af08887dc:

+ Add SignalModifiedKey hook in XGROUP CREATE with MKSTREAM option (#7562)

+ 1 file changed, 1 insertion(+)

+

+Wen Hui in commit a5e0a64b0:

+ fix leak in error handling of debug populate command (#7062)

+ 1 file changed, 3 insertions(+), 4 deletions(-)

+

+Yossi Gottlieb in commit cbfdfa231:

+ Fix TLS cluster tests. (#7578)

+ 1 file changed, 4 insertions(+), 1 deletion(-)

+

+Yossi Gottlieb in commit 6d5376d30:

+ TLS: Propagate and handle SSL_new() failures. (#7576)

+ 4 files changed, 48 insertions(+), 6 deletions(-)

+

+Oran Agra in commit a662cd577:

+ Fix failing tests due to issues with wait_for_log_message (#7572)

+ 3 files changed, 38 insertions(+), 34 deletions(-)

+

+Jiayuan Chen in commit 2786a4b5e:

+ Add optional tls verification (#7502)

+ 6 files changed, 40 insertions(+), 5 deletions(-)

+

+Oran Agra in commit 3ef3d3612:

+ Daily github action: run cluster and sentinel tests with tls (#7575)

+ 1 file changed, 2 insertions(+), 2 deletions(-)

+

+Yossi Gottlieb in commit f20f63322:

+ TLS: support cluster/replication without tls-port.

+ 2 files changed, 5 insertions(+), 4 deletions(-)

+

+grishaf in commit 3c9ae059d:

+ Fix prepareForShutdown function declaration (#7566)

+ 1 file changed, 1 insertion(+), 1 deletion(-)

+

+Oran Agra in commit 3f4803af9:

+ Stabilize bgsave test that sometimes fails with valgrind (#7559)

+ 1 file changed, 20 insertions(+), 2 deletions(-)

+

+Madelyn Olson in commit 1a3c51a1f:

+ Properly reset errno for rdbLoad (#7542)

+ 1 file changed, 1 insertion(+)

+

+Oran Agra in commit 92d80b13a:

+ testsuite may leave servers alive on error (#7549)

+ 1 file changed, 3 insertions(+)

+

+Yossi Gottlieb in commit 245582ba7:

+ Tests: drop TCL 8.6 dependency. (#7548)

+ 1 file changed, 27 insertions(+), 22 deletions(-)

+

+Oran Agra in commit f20e1ba2d:

+ Fixes to release scripts (#7547)

+ 2 files changed, 2 insertions(+), 2 deletions(-)

+

+Remi Collet in commit 60ff56993:

+ Fix deprecated tail syntax in tests (#7543)

+ 1 file changed, 1 insertion(+), 1 deletion(-)

+

+Wen Hui in commit 34e8541b9:

+ Add missing calls to raxStop (#7532)

+ 4 files changed, 63 insertions(+), 19 deletions(-)

+

+Wen Hui in commit 2f7bc5435:

+ add missing caching command in client help (#7399)

+ 1 file changed, 1 insertion(+)

+

+zhaozhao.zz in commit c15be9ffe:

+ replication: need handle -NOPERM error after send ping (#7538)

+ 1 file changed, 1 insertion(+)

+

+Scott Brenner in commit 1b29152c3:

+ GitHub Actions workflows - use latest version of actions/checkout (#7534)

+ 2 files changed, 10 insertions(+), 10 deletions(-)

+

+================================================================================

+Redis 6.0.6 Released Mon Jul 20 09:31:30 IDT 2020

+================================================================================

+

+Upgrade urgency MODERATE: several bugs with moderate impact are fixed here.

+

+The most important issues are listed here:

+

+* Fix crash when enabling CLIENT TRACKING with prefix

+* EXEC always fails with EXECABORT and multi-state is cleared

+* RESTORE ABSTTL won't store expired keys into the db

+* redis-cli better handling of non-printable key names

+* TLS: Ignore client cert when tls-auth-clients off

+* Tracking: fix invalidation message on flush

+* Notify systemd on Sentinel startup

+* Fix crash on a misuse of STRALGO

+* Few fixes in module API

+* Fix a few rare leaks (STRALGO error misuse, Sentinel)

+* Fix a possible invalid access in defrag of scripts (unlikely to cause real harm)

+

+New features:

+

+* LPOS command to search in a list

+* Use user+pass for MIGRATE in redis-cli and redis-benchmark in cluster mode

+* redis-cli support TLS for --pipe, --rdb and --replica options

+* TLS: Session caching configuration support

+

+And this is the full list of commits:

+

+Itamar Haber in commit 50548cafc:

+ Adds SHA256SUM to redis-stable tarball upload

+ 1 file changed, 1 insertion(+)

+

+yoav-steinberg in commit 3a4c6684f:

+ Support passing stack allocated module strings to moduleCreateArgvFromUserFormat (#7528)

+ 1 file changed, 4 insertions(+), 1 deletion(-)

+

+Luke Palmer in commit 2fd0b2bd6:

+ Send null for invalidate on flush (#7469)

+ 1 file changed, 14 insertions(+), 10 deletions(-)

+

+dmurnane in commit c3c81e1a8:

+ Notify systemd on sentinel startup (#7168)

+ 1 file changed, 4 insertions(+)

+

+Developer-Ecosystem-Engineering in commit e2770f29b:

+ Add registers dump support for Apple silicon (#7453)

+ 1 file changed, 54 insertions(+), 2 deletions(-)

+

+Wen Hui in commit b068eae97:

+ correct error msg for num connections reaching maxclients in cluster mode (#7444)

+ 1 file changed, 2 insertions(+), 2 deletions(-)

+

+WuYunlong in commit e6169ae5c:

+ Fix command help for unexpected options (#7476)

+ 6 files changed, 20 insertions(+), 3 deletions(-)

+

+WuYunlong in commit abf08fc02:

+ Refactor RM_KeyType() by using macro. (#7486)

+ 1 file changed, 1 insertion(+), 1 deletion(-)

+

+Oran Agra in commit 11b83076a:

+ diskless master disconnect replicas when rdb child failed (#7518)

+ 1 file changed, 6 insertions(+), 5 deletions(-)

+

+Oran Agra in commit 8f27f2f7d:

+ redis-cli tests, fix valgrind timing issue (#7519)

+ 1 file changed, 1 insertion(+), 1 deletion(-)

+

+WuYunlong in commit 180b588e8:

+ Fix out of update help info in tcl tests. (#7516)

+ 1 file changed, 2 deletions(-)

+

+Qu Chen in commit 417c60bdc:

+ Replica always reports master's config epoch in CLUSTER NODES output. (#7235)

+ 1 file changed, 5 insertions(+), 1 deletion(-)

+

+Oran Agra in commit 72a242419:

+ RESTORE ABSTTL skip expired keys - leak (#7511)

+ 1 file changed, 1 insertion(+)

+

+Oran Agra in commit 2ca45239f:

+ fix recently added time sensitive tests failing with valgrind (#7512)

+ 2 files changed, 12 insertions(+), 6 deletions(-)

+

+Oran Agra in commit 123dc8b21:

+ runtest --stop pause stops before terminating the redis server (#7513)

+ 2 files changed, 8 insertions(+), 2 deletions(-)

+

+Oran Agra in commit a6added45:

+ update release scripts for new hosts, and CI to run more tests (#7480)

+ 5 files changed, 68 insertions(+), 26 deletions(-)

+

+jimgreen2013 in commit cf4869f9e:

+ fix description about ziplist, the code is ok (#6318)

+ 1 file changed, 2 insertions(+), 2 deletions(-)

+

+马永泽 in commit d548f219b:

+ fix benchmark in cluster mode fails to authenticate (#7488)

+ 1 file changed, 56 insertions(+), 40 deletions(-)

+

+Abhishek Soni in commit e58eb7b89:

+ fix: typo in CI job name (#7466)

+ 1 file changed, 1 insertion(+), 1 deletion(-)

+

+Jiayuan Chen in commit 6def10a2b:

+ Fix typo in deps README (#7500)

+ 1 file changed, 1 insertion(+), 1 deletion(-)

+

+WuYunlong in commit 8af61afef:

+ Add missing latency-monitor tcl test to test_helper.tcl. (#6782)

+ 1 file changed, 1 insertion(+)

+

+Yossi Gottlieb in commit a419f400e:

+ TLS: Session caching configuration support. (#7420)

+ 6 files changed, 56 insertions(+), 16 deletions(-)

+

+Yossi Gottlieb in commit 2e4bb2667:

+ TLS: Ignore client cert when tls-auth-clients off. (#7457)

+ 1 file changed, 1 insertion(+), 3 deletions(-)

+

+James Hilliard in commit f0b1aee9e:

+ Use pkg-config to properly detect libssl and libcrypto libraries (#7452)

+ 1 file changed, 15 insertions(+), 3 deletions(-)

+

+Yossi Gottlieb in commit e92b99564:

+ TLS: Add missing redis-cli options. (#7456)

+ 3 files changed, 166 insertions(+), 52 deletions(-)

+

+Oran Agra in commit 1f3db5bf5:

+ redis-cli --hotkeys fixed to handle non-printable key names

+ 1 file changed, 11 insertions(+), 5 deletions(-)

+

+Oran Agra in commit c3044f369:

+ redis-cli --bigkeys fixed to handle non-printable key names

+ 1 file changed, 24 insertions(+), 16 deletions(-)

+

+Oran Agra in commit b3f75527b:

+ RESTORE ABSTTL won't store expired keys into the db (#7472)

+ 4 files changed, 46 insertions(+), 16 deletions(-)

+

+huangzhw in commit 6f87fc92f:

+ defrag.c activeDefragSdsListAndDict when defrag sdsele, We can't use (#7492)

+ 1 file changed, 1 insertion(+), 1 deletion(-)

+

+Oran Agra in commit d8e6a3e5b:

+ skip a test that uses +inf on valgrind (#7440)

+ 1 file changed, 12 insertions(+), 9 deletions(-)

+

+Oran Agra in commit 28fd1a110:

+ stabilize tests that look for log lines (#7367)

+ 3 files changed, 33 insertions(+), 11 deletions(-)

+

+Oran Agra in commit a513b4ed9:

+ tests/valgrind: don't use debug restart (#7404)

+ 4 files changed, 114 insertions(+), 57 deletions(-)

+

+Oran Agra in commit 70e72fc1b:

+ change references to the github repo location (#7479)

+ 5 files changed, 7 insertions(+), 7 deletions(-)

+

+zhaozhao.zz in commit c63e533cc:

+ BITOP: propagate only when it really SET or DEL targetkey (#5783)

+ 1 file changed, 2 insertions(+), 1 deletion(-)

+

+antirez in commit 31040ff54:

+ Update comment to clarify change in #7398.

+ 1 file changed, 4 insertions(+), 1 deletion(-)

+

+antirez in commit b605fe827:

+ LPOS: option FIRST renamed RANK.

+ 2 files changed, 19 insertions(+), 19 deletions(-)

+

+Dave Nielsen in commit 8deb24954:

+ updated copyright year

+ 1 file changed, 1 insertion(+), 1 deletion(-)

+

+Oran Agra in commit a61c2930c:

+ EXEC always fails with EXECABORT and multi-state is cleared

+ 6 files changed, 204 insertions(+), 91 deletions(-)

+

+antirez in commit 3c8041637:

+ Include cluster.h for getClusterConnectionsCount().

+ 1 file changed, 1 insertion(+)

+

+antirez in commit 5be673ee8:

+ Fix BITFIELD i64 type handling, see #7417.

+ 1 file changed, 8 insertions(+), 6 deletions(-)

+

+antirez in commit 5f289df9b:

+ Clarify maxclients and cluster in conf. Remove myself too.

+ 2 files changed, 9 insertions(+), 1 deletion(-)

+

+hwware in commit 000f928d6:

+ fix memory leak in sentinel connection sharing

+ 1 file changed, 1 insertion(+)

+

+chenhui0212 in commit d9a3c0171:

+ Fix comments in function raxLowWalk of listpack.c

+ 1 file changed, 2 insertions(+), 2 deletions(-)

+

+Tomasz Poradowski in commit 7526e4506:

+ ensure SHUTDOWN_NOSAVE in Sentinel mode

+ 2 files changed, 9 insertions(+), 8 deletions(-)

+

+chenhui0212 in commit 6487cbc33:

+ fix comments in listpack.c

+ 1 file changed, 2 insertions(+), 2 deletions(-)

+

+antirez in commit 69b66bfca:

+ Use cluster connections too, to limit maxclients.

+ 3 files changed, 23 insertions(+), 8 deletions(-)

+

+antirez in commit 5a960a033:

+ Tracking: fix enableBcastTrackingForPrefix() invalid sdslen() call.

+ 1 file changed, 1 insertion(+), 1 deletion(-)

+

+root in commit 1c2e50de3:

+ cluster.c remove if of clusterSendFail in markNodeAsFailingIfNeeded

+ 1 file changed, 1 insertion(+), 1 deletion(-)

+

+meir@redislabs.com in commit 040efb697:

+ Fix RM_ScanKey module api not to return int encoded strings

+ 3 files changed, 24 insertions(+), 7 deletions(-)

+

+antirez in commit 1b8b7941d:

+ Fix LCS object type checking. Related to #7379.

+ 1 file changed, 17 insertions(+), 10 deletions(-)

+

+hwware in commit 6b571b45a:

+ fix memory leak

+ 1 file changed, 11 insertions(+), 12 deletions(-)

+

+hwware in commit 674759062:

+ fix server crash in STRALGO command

+ 1 file changed, 7 insertions(+)

+

+Benjamin Sergeant in commit a05ffefdc:

+ Update redis-cli.c

+ 1 file changed, 19 insertions(+), 6 deletions(-)

+

+Jamie Scott in commit 870b63733:

+ minor fix

+ 1 file changed, 2 insertions(+), 3 deletions(-)

+

================================================================================

Redis 6.0.5 Released Tue Jun 09 11:56:08 CEST 2020

================================================================================

diff --git a/BUGS b/BUGS

index a8e936892..7af259340 100644

--- a/BUGS

+++ b/BUGS

@@ -1 +1 @@

-Please check https://github.com/antirez/redis/issues

+Please check https://github.com/redis/redis/issues

diff --git a/CONTRIBUTING b/CONTRIBUTING

deleted file mode 100644

index 000edbeaf..000000000

--- a/CONTRIBUTING

+++ /dev/null

@@ -1,50 +0,0 @@

-Note: by contributing code to the Redis project in any form, including sending

-a pull request via Github, a code fragment or patch via private email or

-public discussion groups, you agree to release your code under the terms

-of the BSD license that you can find in the COPYING file included in the Redis

-source distribution. You will include BSD license in the COPYING file within

-each source file that you contribute.

-

-# IMPORTANT: HOW TO USE REDIS GITHUB ISSUES

-

-* Github issues SHOULD ONLY BE USED to report bugs, and for DETAILED feature

- requests. Everything else belongs to the Redis Google Group:

-

- https://groups.google.com/forum/m/#!forum/Redis-db

-

- PLEASE DO NOT POST GENERAL QUESTIONS that are not about bugs or suspected

- bugs in the Github issues system. We'll be very happy to help you and provide

- all the support in the mailing list.

-

- There is also an active community of Redis users at Stack Overflow:

-

- http://stackoverflow.com/questions/tagged/redis

-

-# How to provide a patch for a new feature

-

-1. If it is a major feature or a semantical change, please don't start coding

-straight away: if your feature is not a conceptual fit you'll lose a lot of

-time writing the code without any reason. Start by posting in the mailing list

-and creating an issue at Github with the description of, exactly, what you want

-to accomplish and why. Use cases are important for features to be accepted.

-Here you'll see if there is consensus about your idea.

-

-2. If in step 1 you get an acknowledgment from the project leaders, use the

- following procedure to submit a patch:

-

- a. Fork Redis on github ( http://help.github.com/fork-a-repo/ )

- b. Create a topic branch (git checkout -b my_branch)

- c. Push to your branch (git push origin my_branch)

- d. Initiate a pull request on github ( https://help.github.com/articles/creating-a-pull-request/ )

- e. Done :)

-

-3. Keep in mind that we are very overloaded, so issues and PRs sometimes wait

-for a *very* long time. However this is not lack of interest, as the project

-gets more and more users, we find ourselves in a constant need to prioritize

-certain issues/PRs over others. If you think your issue/PR is very important

-try to popularize it, have other users commenting and sharing their point of

-view and so forth. This helps.

-

-4. For minor fixes just open a pull request on Github.

-

-Thanks!

diff --git a/COPYING b/COPYING

index 3a9a7a66f..e1e5dd654 100644

--- a/COPYING

+++ b/COPYING

@@ -1,5 +1,5 @@

-Copyright (c) 2006-2015, Salvatore Sanfilippo

-Copyright (C) 2019, John Sully

+Copyright (c) 2006-2020, Salvatore Sanfilippo

+Copyright (C) 2019-2020, John Sully

All rights reserved.

Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

diff --git a/README.md b/README.md

index fe0030dff..1decad4b0 100644

--- a/README.md

+++ b/README.md

@@ -8,6 +8,8 @@

##### Have feedback? Take our quick survey: https://www.surveymonkey.com/r/Y9XNS93

+##### KeyDB is Hiring! We are currently building out our dev team. If you are interested please see the posting here: https://keydb.dev/careers.html

+

What is KeyDB?

--------------

@@ -15,11 +17,13 @@ KeyDB is a high performance fork of Redis with a focus on multithreading, memory

KeyDB maintains full compatibility with the Redis protocol, modules, and scripts. This includes the atomicity guarantees for scripts and transactions. Because KeyDB keeps in sync with Redis development KeyDB is a superset of Redis functionality, making KeyDB a drop in replacement for existing Redis deployments.

-On the same hardware KeyDB can perform twice as many queries per second as Redis, with 60% lower latency. Active-Replication simplifies hot-spare failover allowing you to easily distribute writes over replicas and use simple TCP based load balancing/failover. KeyDB's higher performance allows you to do more on less hardware which reduces operation costs and complexity.

+On the same hardware KeyDB can achieve significantly higher throughput than Redis. Active-Replication simplifies hot-spare failover allowing you to easily distribute writes over replicas and use simple TCP based load balancing/failover. KeyDB's higher performance allows you to do more on less hardware which reduces operation costs and complexity.

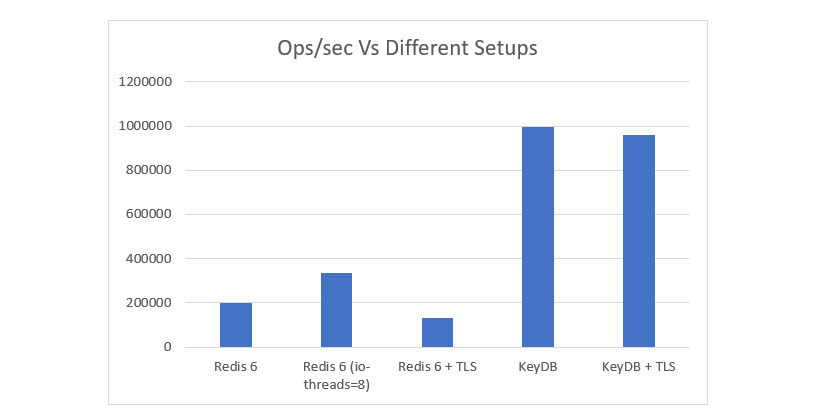

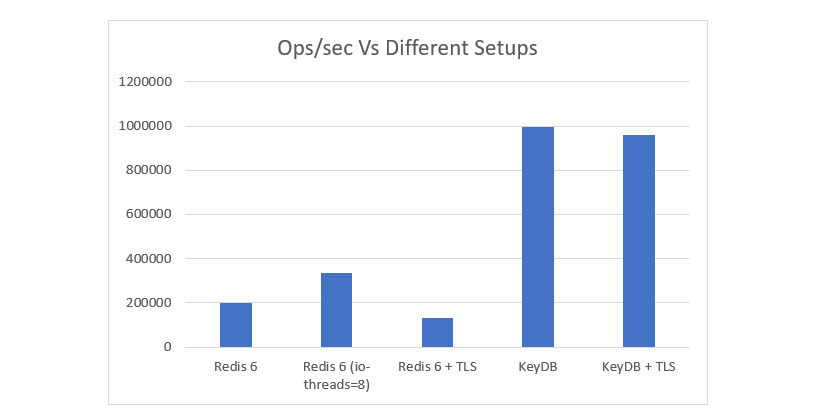

+

+The chart below compares several KeyDB and Redis setups, including the latest Redis6 io-threads option, and TLS benchmarks.

-See the full benchmark results and setup information here: https://docs.keydb.dev/blog/2019/10/07/blog-post/

+

+See the full benchmark results and setup information here: https://docs.keydb.dev/blog/2020/09/29/blog-post/

Why fork Redis?

---------------

@@ -82,6 +86,8 @@ Building KeyDB

KeyDB can be compiled and is tested for use on Linux. KeyDB currently relies on SO_REUSEPORT's load balancing behavior which is available only in Linux. When we support marshalling connections across threads we plan to support other operating systems such as FreeBSD.

+More on CentOS/Archlinux/Alpine/Debian/Ubuntu dependencies and builds can be found here: https://docs.keydb.dev/docs/build/

+

Install dependencies:

% sudo apt install build-essential nasm autotools-dev autoconf libjemalloc-dev tcl tcl-dev uuid-dev libcurl4-openssl-dev

@@ -95,9 +101,14 @@ libssl-dev on Debian/Ubuntu) and run:

% make BUILD_TLS=yes

-You can enable flash support with:

+To build with systemd support, you'll need systemd development libraries (such

+as libsystemd-dev on Debian/Ubuntu or systemd-devel on CentOS) and run:

- % make MALLOC=memkind

+ % make USE_SYSTEMD=yes

+

+To append a suffix to KeyDB program names, use:

+

+ % make PROG_SUFFIX="-alt"

***Note that the following dependencies may be needed:

% sudo apt-get install autoconf autotools-dev libnuma-dev libtool

@@ -112,7 +123,7 @@ installed):

Fixing build problems with dependencies or cached build options

---------

-KeyDB has some dependencies which are included into the `deps` directory.

+KeyDB has some dependencies which are included in the `deps` directory.

`make` does not automatically rebuild dependencies even if something in

the source code of dependencies changes.

@@ -139,7 +150,7 @@ with a 64 bit target, or the other way around, you need to perform a

In case of build errors when trying to build a 32 bit binary of KeyDB, try

the following steps:

-* Install the packages libc6-dev-i386 (also try g++-multilib).

+* Install the package libc6-dev-i386 (also try g++-multilib).

* Try using the following command line instead of `make 32bit`:

`make CFLAGS="-m32 -march=native" LDFLAGS="-m32"`

@@ -164,14 +175,14 @@ Verbose build

-------------

KeyDB will build with a user friendly colorized output by default.

-If you want to see a more verbose output use the following:

+If you want to see a more verbose output, use the following:

% make V=1

Running KeyDB

-------------

-To run KeyDB with the default configuration just type:

+To run KeyDB with the default configuration, just type:

% cd src

% ./keydb-server

@@ -224,7 +235,7 @@ You can find the list of all the available commands at https://docs.keydb.dev/do

Installing KeyDB

-----------------

-In order to install KeyDB binaries into /usr/local/bin just use:

+In order to install KeyDB binaries into /usr/local/bin, just use:

% make install

@@ -233,8 +244,8 @@ different destination.

Make install will just install binaries in your system, but will not configure

init scripts and configuration files in the appropriate place. This is not

-needed if you want just to play a bit with KeyDB, but if you are installing

-it the proper way for a production system, we have a script doing this

+needed if you just want to play a bit with KeyDB, but if you are installing

+it the proper way for a production system, we have a script that does this

for Ubuntu and Debian systems:

% cd utils

diff --git a/deps/README.md b/deps/README.md

index 685dbb40d..02c99052f 100644

--- a/deps/README.md

+++ b/deps/README.md

@@ -21,7 +21,7 @@ just following tose steps:

1. Remove the jemalloc directory.

2. Substitute it with the new jemalloc source tree.

-3. Edit the Makefile localted in the same directory as the README you are

+3. Edit the Makefile located in the same directory as the README you are

reading, and change the --with-version in the Jemalloc configure script

options with the version you are using. This is required because otherwise

Jemalloc configuration script is broken and will not work nested in another

@@ -33,7 +33,7 @@ If you want to upgrade Jemalloc while also providing support for

active defragmentation, in addition to the above steps you need to perform

the following additional steps:

-5. In Jemalloc three, file `include/jemalloc/jemalloc_macros.h.in`, make sure

+5. In Jemalloc tree, file `include/jemalloc/jemalloc_macros.h.in`, make sure

to add `#define JEMALLOC_FRAG_HINT`.

6. Implement the function `je_get_defrag_hint()` inside `src/jemalloc.c`. You

can see how it is implemented in the current Jemalloc source tree shipped

@@ -47,9 +47,9 @@ Hiredis

Hiredis uses the SDS string library, that must be the same version used inside Redis itself. Hiredis is also very critical for Sentinel. Historically Redis often used forked versions of hiredis in a way or the other. In order to upgrade it is advised to take a lot of care:

1. Check with diff if hiredis API changed and what impact it could have in Redis.

-2. Make sure thet the SDS library inside Hiredis and inside Redis are compatible.

+2. Make sure that the SDS library inside Hiredis and inside Redis are compatible.

3. After the upgrade, run the Redis Sentinel test.

-4. Check manually that redis-cli and redis-benchmark behave as expecteed, since we have no tests for CLI utilities currently.

+4. Check manually that redis-cli and redis-benchmark behave as expected, since we have no tests for CLI utilities currently.

Linenoise

---

@@ -77,6 +77,6 @@ and our version:

1. Makefile is modified to allow a different compiler than GCC.

2. We have the implementation source code, and directly link to the following external libraries: `lua_cjson.o`, `lua_struct.o`, `lua_cmsgpack.o` and `lua_bit.o`.

-3. There is a security fix in `ldo.c`, line 498: The check for `LUA_SIGNATURE[0]` is removed in order toa void direct bytecode execution.

+3. There is a security fix in `ldo.c`, line 498: The check for `LUA_SIGNATURE[0]` is removed in order to avoid direct bytecode execution.

diff --git a/deps/linenoise/linenoise.c b/deps/linenoise/linenoise.c

index cfe51e768..ccf5c5548 100644

--- a/deps/linenoise/linenoise.c

+++ b/deps/linenoise/linenoise.c

@@ -625,7 +625,7 @@ static void refreshMultiLine(struct linenoiseState *l) {

rpos2 = (plen+l->pos+l->cols)/l->cols; /* current cursor relative row. */

lndebug("rpos2 %d", rpos2);

- /* Go up till we reach the expected positon. */

+ /* Go up till we reach the expected position. */

if (rows-rpos2 > 0) {

lndebug("go-up %d", rows-rpos2);

snprintf(seq,64,"\x1b[%dA", rows-rpos2);

@@ -767,7 +767,7 @@ void linenoiseEditBackspace(struct linenoiseState *l) {

}

}

-/* Delete the previosu word, maintaining the cursor at the start of the

+/* Delete the previous word, maintaining the cursor at the start of the

* current word. */

void linenoiseEditDeletePrevWord(struct linenoiseState *l) {

size_t old_pos = l->pos;

diff --git a/deps/lua/src/ldo.c b/deps/lua/src/ldo.c

index 514f7a2a3..939940a4c 100644

--- a/deps/lua/src/ldo.c

+++ b/deps/lua/src/ldo.c

@@ -274,7 +274,7 @@ int luaD_precall (lua_State *L, StkId func, int nresults) {

CallInfo *ci;

StkId st, base;

Proto *p = cl->p;

- luaD_checkstack(L, p->maxstacksize);

+ luaD_checkstack(L, p->maxstacksize + p->numparams);

func = restorestack(L, funcr);

if (!p->is_vararg) { /* no varargs? */

base = func + 1;

diff --git a/keydb.conf b/keydb.conf

index e8ca634b7..3874c5acd 100644

--- a/keydb.conf

+++ b/keydb.conf

@@ -24,7 +24,7 @@

# to customize a few per-server settings. Include files can include

# other files, so use this wisely.

#

-# Notice option "include" won't be rewritten by command "CONFIG REWRITE"

+# Note that option "include" won't be rewritten by command "CONFIG REWRITE"

# from admin or KeyDB Sentinel. Since KeyDB always uses the last processed

# line as value of a configuration directive, you'd better put includes

# at the beginning of this file to avoid overwriting config change at runtime.

@@ -46,7 +46,7 @@

################################## NETWORK #####################################

# By default, if no "bind" configuration directive is specified, KeyDB listens

-# for connections from all the network interfaces available on the server.

+# for connections from all available network interfaces on the host machine.

# It is possible to listen to just one or multiple selected interfaces using

# the "bind" configuration directive, followed by one or more IP addresses.

#

@@ -58,13 +58,12 @@

# ~~~ WARNING ~~~ If the computer running KeyDB is directly exposed to the

# internet, binding to all the interfaces is dangerous and will expose the

# instance to everybody on the internet. So by default we uncomment the

-# following bind directive, that will force KeyDB to listen only into

-# the IPv4 loopback interface address (this means KeyDB will be able to

-# accept connections only from clients running into the same computer it

-# is running).

+# following bind directive, that will force KeyDB to listen only on the

+# IPv4 loopback interface address (this means KeyDB will only be able to

+# accept client connections from the same host that it is running on).

#

# IF YOU ARE SURE YOU WANT YOUR INSTANCE TO LISTEN TO ALL THE INTERFACES

-# JUST COMMENT THE FOLLOWING LINE.

+# JUST COMMENT OUT THE FOLLOWING LINE.

# ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

bind 127.0.0.1

@@ -93,8 +92,8 @@ port 6379

# TCP listen() backlog.

#

-# In high requests-per-second environments you need an high backlog in order

-# to avoid slow clients connections issues. Note that the Linux kernel

+# In high requests-per-second environments you need a high backlog in order

+# to avoid slow clients connection issues. Note that the Linux kernel

# will silently truncate it to the value of /proc/sys/net/core/somaxconn so

# make sure to raise both the value of somaxconn and tcp_max_syn_backlog

# in order to get the desired effect.

@@ -118,8 +117,8 @@ timeout 0

# of communication. This is useful for two reasons:

#

# 1) Detect dead peers.

-# 2) Take the connection alive from the point of view of network

-# equipment in the middle.

+# 2) Force network equipment in the middle to consider the connection to be

+# alive.

#

# On Linux, the specified value (in seconds) is the period used to send ACKs.

# Note that to close the connection the double of the time is needed.

@@ -159,11 +158,14 @@ tcp-keepalive 300

# By default, clients (including replica servers) on a TLS port are required

# to authenticate using valid client side certificates.

#

-# It is possible to disable authentication using this directive.

+# If "no" is specified, client certificates are not required and not accepted.

+# If "optional" is specified, client certificates are accepted and must be

+# valid if provided, but are not required.

#

# tls-auth-clients no

+# tls-auth-clients optional

-# By default, a Redis replica does not attempt to establish a TLS connection

+# By default, a KeyDB replica does not attempt to establish a TLS connection

# with its master.

#

# Use the following directive to enable TLS on replication links.

@@ -225,11 +227,12 @@ daemonize no

# supervision tree. Options:

# supervised no - no supervision interaction

# supervised upstart - signal upstart by putting KeyDB into SIGSTOP mode

+# requires "expect stop" in your upstart job config

# supervised systemd - signal systemd by writing READY=1 to $NOTIFY_SOCKET

# supervised auto - detect upstart or systemd method based on

# UPSTART_JOB or NOTIFY_SOCKET environment variables

# Note: these supervision methods only signal "process is ready."

-# They do not enable continuous liveness pings back to your supervisor.

+# They do not enable continuous pings back to your supervisor.

supervised no

# If a pid file is specified, KeyDB writes it where specified at startup

@@ -279,6 +282,9 @@ databases 16

# ASCII art logo in startup logs by setting the following option to yes.

always-show-logo yes

+# Retrieving "message of today" using CURL requests.

+#enable-motd yes

+

################################ SNAPSHOTTING ################################

#

# Save the DB on disk:

@@ -288,7 +294,7 @@ always-show-logo yes

# Will save the DB if both the given number of seconds and the given

# number of write operations against the DB occurred.

#

-# In the example below the behaviour will be to save:

+# In the example below the behavior will be to save:

# after 900 sec (15 min) if at least 1 key changed

# after 300 sec (5 min) if at least 10 keys changed

# after 60 sec if at least 10000 keys changed

@@ -321,7 +327,7 @@ save 60 10000

stop-writes-on-bgsave-error yes

# Compress string objects using LZF when dump .rdb databases?

-# For default that's set to 'yes' as it's almost always a win.

+# By default compression is enabled as it's almost always a win.

# If you want to save some CPU in the saving child set it to 'no' but

# the dataset will likely be bigger if you have compressible values or keys.

rdbcompression yes

@@ -409,11 +415,11 @@ dir ./

# still reply to client requests, possibly with out of date data, or the

# data set may just be empty if this is the first synchronization.

#

-# 2) if replica-serve-stale-data is set to 'no' the replica will reply with

-# an error "SYNC with master in progress" to all the kind of commands

-# but to INFO, replicaOF, AUTH, PING, SHUTDOWN, REPLCONF, ROLE, CONFIG,

-# SUBSCRIBE, UNSUBSCRIBE, PSUBSCRIBE, PUNSUBSCRIBE, PUBLISH, PUBSUB,

-# COMMAND, POST, HOST: and LATENCY.

+# 2) If replica-serve-stale-data is set to 'no' the replica will reply with

+# an error "SYNC with master in progress" to all commands except:

+# INFO, REPLICAOF, AUTH, PING, SHUTDOWN, REPLCONF, ROLE, CONFIG, SUBSCRIBE,

+# UNSUBSCRIBE, PSUBSCRIBE, PUNSUBSCRIBE, PUBLISH, PUBSUB, COMMAND, POST,

+# HOST and LATENCY.

#

replica-serve-stale-data yes

@@ -491,14 +497,14 @@ repl-diskless-sync-delay 5

# -----------------------------------------------------------------------------

# WARNING: RDB diskless load is experimental. Since in this setup the replica

# does not immediately store an RDB on disk, it may cause data loss during

-# failovers. RDB diskless load + Redis modules not handling I/O reads may also

-# cause Redis to abort in case of I/O errors during the initial synchronization

+# failovers. RDB diskless load + KeyDB modules not handling I/O reads may also

+# cause KeyDB to abort in case of I/O errors during the initial synchronization

# stage with the master. Use only if your do what you are doing.

# -----------------------------------------------------------------------------

#

# Replica can load the RDB it reads from the replication link directly from the

# socket, or store the RDB to a file and read that file after it was completely

-# recived from the master.

+# received from the master.

#

# In many cases the disk is slower than the network, and storing and loading

# the RDB file may increase replication time (and even increase the master's

@@ -528,7 +534,8 @@ repl-diskless-load disabled

#

# It is important to make sure that this value is greater than the value

# specified for repl-ping-replica-period otherwise a timeout will be detected

-# every time there is low traffic between the master and the replica.

+# every time there is low traffic between the master and the replica. The default

+# value is 60 seconds.

#

# repl-timeout 60

@@ -553,28 +560,28 @@ repl-disable-tcp-nodelay no

# partial resync is enough, just passing the portion of data the replica

# missed while disconnected.

#

-# The bigger the replication backlog, the longer the time the replica can be

-# disconnected and later be able to perform a partial resynchronization.

+# The bigger the replication backlog, the longer the replica can endure the

+# disconnect and later be able to perform a partial resynchronization.

#

-# The backlog is only allocated once there is at least a replica connected.

+# The backlog is only allocated if there is at least one replica connected.

#

# repl-backlog-size 1mb

-# After a master has no longer connected replicas for some time, the backlog

-# will be freed. The following option configures the amount of seconds that

-# need to elapse, starting from the time the last replica disconnected, for

-# the backlog buffer to be freed.

+# After a master has no connected replicas for some time, the backlog will be

+# freed. The following option configures the number of seconds that need to

+# elapse, starting from the time the last replica disconnected, for the backlog

+# buffer to be freed.

#